Question #391

HOTSPOT

-

Case study

-

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

-

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. When you are ready to answer a question, click the Question button to return to the question.

Background

-

Munson’s Pickles and Preserves Farm is an agricultural cooperative corporation based in Washington, US, with farms located across the United States. The company supports agricultural production resources by distributing seeds, fertilizers, chemicals, fuel, and farm machinery to the farms.

Current Environment

-

The company is migrating all applications from an on-premises datacenter to Microsoft Azure. Applications support distributors, farmers, and internal company staff.

Corporate website

-

• The company hosts a public website located at http://www.munsonspicklesandpreservesfarm.com. The site supports farmers and distributors who request agricultural production resources.

Farms

-

• The company created a new customer tenant in the Microsoft Entra admin center to support authentication and authorization for applications.

Distributors

-

• Distributors integrate their applications with data that is accessible by using APIs hosted at http://www.munsonspicklesandpreservesfarm.com/api to receive and update resource data.

Requirements

-

The application components must meet the following requirements:

Corporate website

-

• The site must be migrated to Azure App Service.

• Costs must be minimized when hosting in Azure.

• Applications must automatically scale independent of the compute resources.

• All code changes must be validated by internal staff before release to production.

• File transfer speeds must improve, and webpage-load performance must increase.

• All site settings must be centrally stored, secured without using secrets, and encrypted at rest and in transit.

• A queue-based load leveling pattern must be implemented by using Azure Service Bus queues to support high volumes of website agricultural production resource requests.
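The queue-based load leveling requirement can be illustrated with a small simulation. This is a minimal sketch, not Service Bus code: a Python `deque` stands in for the Service Bus queue, and `level_load`, the arrival figures, and the per-tick capacity are hypothetical. Bursts of requests accumulate in the queue while the consumer drains it at a fixed rate, so the backend never handles more than its capacity in one tick.

```python
from __future__ import annotations

from collections import deque


def level_load(arrivals_per_tick: list[int], capacity_per_tick: int) -> tuple[list[int], list[int]]:
    """Simulate a queue between a bursty producer and a fixed-rate consumer.

    The deque is a hypothetical stand-in for a Service Bus queue.
    Returns (requests processed in each tick, queue depth after each tick).
    """
    queue: deque[int] = deque()
    processed: list[int] = []
    depth: list[int] = []
    next_request_id = 0
    for arrivals in arrivals_per_tick:
        # Producer: the website enqueues every incoming request immediately.
        for _ in range(arrivals):
            queue.append(next_request_id)
            next_request_id += 1
        # Consumer: the backend handles at most capacity_per_tick requests.
        handled = 0
        while queue and handled < capacity_per_tick:
            queue.popleft()
            handled += 1
        processed.append(handled)
        depth.append(len(queue))
    return processed, depth


# A burst of 10 requests followed by a lull, with a backend that handles 4 per tick.
processed, depth = level_load([10, 0, 0, 2], capacity_per_tick=4)
print(processed)  # [4, 4, 2, 2]
print(depth)      # [6, 2, 0, 0]
```

The producer never waits on the consumer; the queue depth absorbs the spike instead, which is the point of the pattern.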

Farms

-

• Farmers must authenticate to applications by using Microsoft Entra ID.

Distributors

-

• The company must track a custom telemetry value with each API call and monitor performance of all APIs.

• API telemetry values must be charted to evaluate variations and trends for resource data.
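Charting a custom value per API call to evaluate variations and trends usually means aggregating raw values into time windows. The sketch below is a hypothetical local pre-aggregator (the `Aggregate` class and `aggregate_by_window` function are illustrative, not an Application Insights API); it keeps count, sum, minimum, and maximum per window, which is enough to chart the mean and range over time.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Aggregate:
    """Running summary of one custom telemetry value within one time window."""
    count: int = 0
    total: float = 0.0
    minimum: float = float("inf")
    maximum: float = float("-inf")

    def add(self, value: float) -> None:
        self.count += 1
        self.total += value
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)

    @property
    def mean(self) -> float:
        return self.total / self.count


def aggregate_by_window(samples: list[tuple[float, float]], window_seconds: int = 60) -> dict[int, Aggregate]:
    """Group (timestamp_seconds, value) pairs into fixed windows, keyed by window start."""
    windows: dict[int, Aggregate] = {}
    for timestamp, value in samples:
        window_start = int(timestamp // window_seconds) * window_seconds
        windows.setdefault(window_start, Aggregate()).add(value)
    return dict(sorted(windows.items()))


# Hypothetical per-call values: two calls in the first minute, one in the second.
series = aggregate_by_window([(0, 1.0), (30, 3.0), (61, 5.0)])
for start, agg in series.items():
    print(start, agg.count, agg.mean, agg.minimum, agg.maximum)
```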

Internal staff

-

• App and API updates must be validated before release to production.

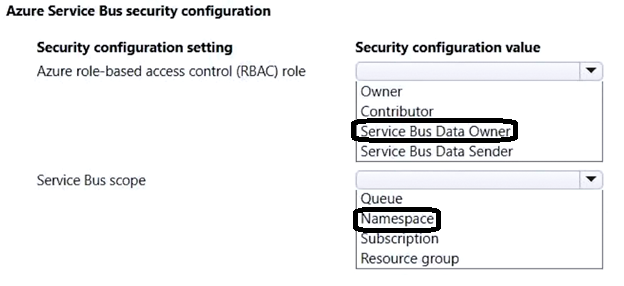

• Staff must be able to select a link to direct them back to the production app when validating an app or API update.

• Staff profile photos and email must be displayed on the website once they authenticate to applications by using their Microsoft Entra ID.

Security

-

• All web communications must be secured by using TLS/HTTPS.

• Web content must be restricted by country/region to support corporate compliance standards.

• The principle of least privilege must be applied when providing any user rights or process access rights.

• Managed identities for Azure resources must be used to authenticate services that support Microsoft Entra ID authentication.

Issues

-

Corporate website

-

• Farmers report HTTP 503 errors at the same time as internal staff report that CPU and memory usage are high.

• Distributors report HTTP 502 errors at the same time as internal staff report that average response times and networking traffic are high.

• Internal staff report that webpage load sizes are large and that pages take a long time to load.

• Developers receive authentication errors when they connect to Service Bus while debugging locally.
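One possible source of local Service Bus authentication errors is that a managed identity exists only when code runs on an Azure host, so on a developer workstation a chained credential falls back to the developer's own signed-in identity, and that identity needs its own Service Bus data-plane role. The sketch below models that fallback in plain Python under stated assumptions: `Identity`, `managed_identity`, `developer_sign_in`, `resolve`, and `can_send` are hypothetical stand-ins for what `DefaultAzureCredential` in the `azure-identity` library does, the user name is made up, and only the role names (`Azure Service Bus Data Sender`, `Azure Service Bus Data Owner`) are real Azure built-in roles.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional

# Real Azure built-in roles that allow sending to a Service Bus entity.
SEND_ROLES = {"Azure Service Bus Data Sender", "Azure Service Bus Data Owner"}


@dataclass
class Identity:
    """Hypothetical stand-in for the identity behind an access token."""
    name: str
    roles: set[str] = field(default_factory=set)


CredentialSource = Callable[[], Optional[Identity]]


def managed_identity(running_on_azure: bool) -> CredentialSource:
    # A managed identity is only available when the code runs on an Azure host.
    def source() -> Optional[Identity]:
        if running_on_azure:
            return Identity("webapp-managed-identity", {"Azure Service Bus Data Sender"})
        return None
    return source


def developer_sign_in(user: Optional[str], roles: set[str]) -> CredentialSource:
    # Models a developer signed in through a local tool such as the Azure CLI.
    def source() -> Optional[Identity]:
        return Identity(user, roles) if user else None
    return source


def resolve(chain: list[CredentialSource]) -> Identity:
    """Return the first identity the chain can produce, like a chained credential."""
    for source in chain:
        identity = source()
        if identity is not None:
            return identity
    raise PermissionError("no credential in the chain is available")


def can_send(identity: Identity) -> bool:
    return bool(identity.roles & SEND_ROLES)


# Local debugging: no managed identity, developer signed in without a data-plane role.
local = resolve([managed_identity(False), developer_sign_in("dev@contoso.com", set())])
print(local.name, can_send(local))
```

In this model, the same chain works unchanged in Azure (the managed identity wins) and locally (the developer identity wins), which is why the role assignment for the developer matters.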

Distributors

-

• Many API telemetry values are sent in a short period of time. Telemetry traffic, data costs, and storage costs must be reduced while preserving a statistically correct analysis of the data points sent by the APIs.
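Reducing telemetry volume while keeping the analysis statistically correct is what sampling with per-item weights achieves: each retained item records how many original items it represents, so counts and sums can be re-estimated. Application Insights sampling works along these lines (retained items carry an `itemCount` field); the sketch below is a simplified fixed-rate model with hypothetical `keep`, `sample`, and `estimated_total` functions, not the SDK's implementation. Hashing the operation ID makes the keep-or-drop decision deterministic, so all telemetry from one API call is kept or dropped together.

```python
from __future__ import annotations

import hashlib


def keep(operation_id: str, percentage: float) -> bool:
    """Deterministically keep roughly `percentage` percent of operations."""
    digest = hashlib.sha256(operation_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 10_000  # 0..9999
    return bucket < percentage * 100


def sample(items: list[dict], percentage: float) -> list[dict]:
    """Keep a subset of items and weight each one by how many originals it stands for."""
    weight = 100.0 / percentage
    return [dict(item, itemCount=weight) for item in items if keep(item["operationId"], percentage)]


def estimated_total(sampled: list[dict]) -> float:
    """Re-estimate the original number of items from the weighted sample."""
    return sum(item["itemCount"] for item in sampled)


# Hypothetical API calls, sampled at 10 percent.
calls = [{"operationId": f"op-{i}", "value": i % 7} for i in range(10_000)]
kept = sample(calls, percentage=10)
print(len(kept), estimated_total(kept))
```

With 10 percent sampling, about a tenth of the calls are kept, and the weighted estimate stays close to the original count.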

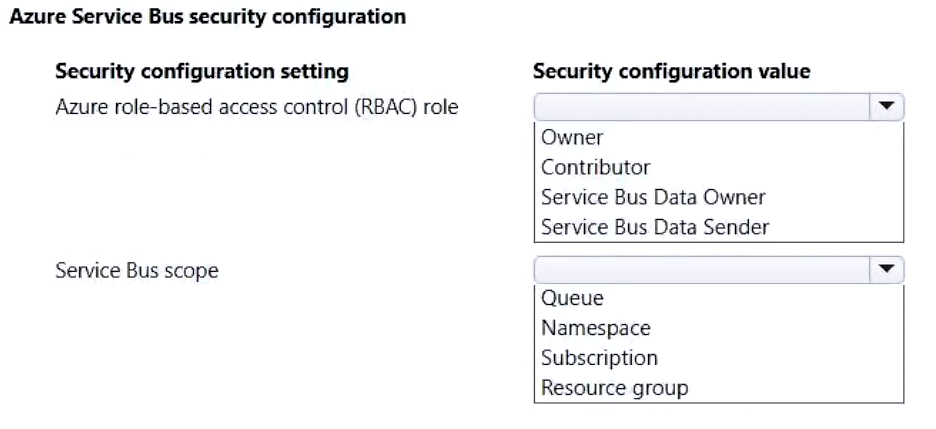

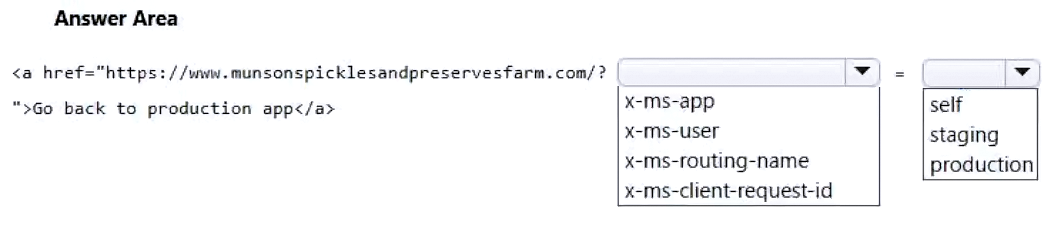

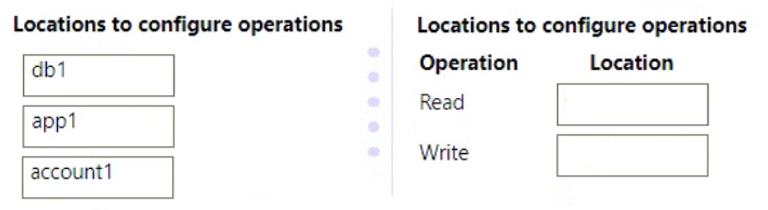

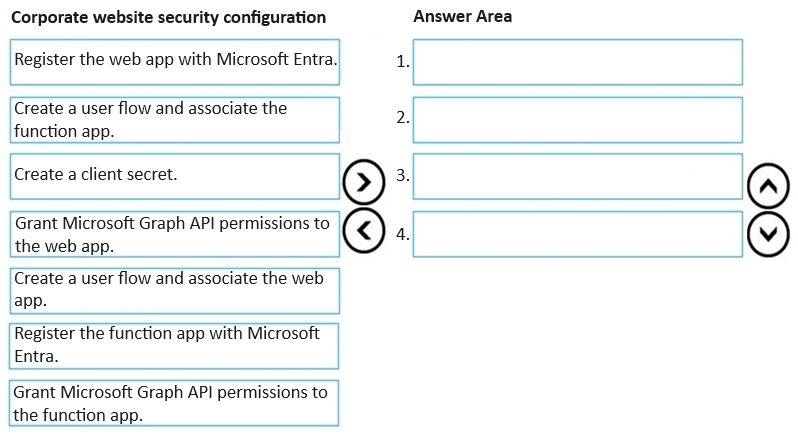

You need to resolve the authentication errors for developers.

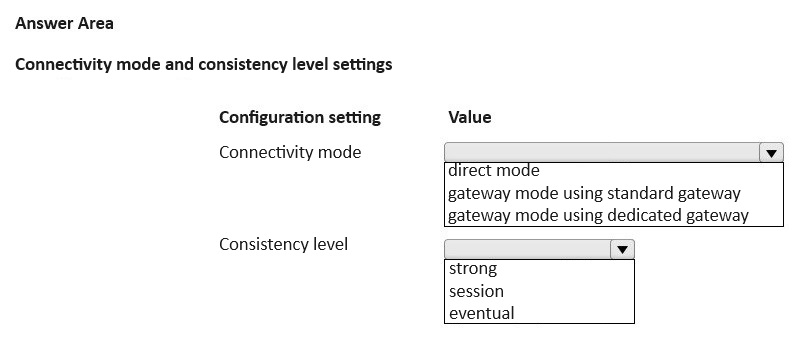

Which Service Bus security configuration should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Question #392

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are implementing an application by using Azure Event Grid to push near-real-time information to customers.

You have the following requirements:

• You must send events that span hundreds of event types to thousands of customers.

• The events must be filtered by event type before processing.

• Authentication and authorization must be handled by using Microsoft Entra ID.

• The events must be published to a single endpoint.

You need to implement Azure Event Grid.

Solution: Publish events to an event domain. Create a custom topic for each customer.

Does the solution meet the goal?
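For reference, publishing to an event domain means posting every event to the one domain endpoint and naming the destination domain topic in each event's `topic` field. The sketch below only builds the JSON request body in the Event Grid event schema; it does not send anything or handle Microsoft Entra authentication. The `domain_event` helper, the domain topic names (one per customer), and the event types are hypothetical.

```python
from __future__ import annotations

import json
import uuid
from datetime import datetime, timezone


def domain_event(domain_topic: str, event_type: str, subject: str, data: dict) -> dict:
    """Build one event in the Event Grid schema, addressed to a domain topic."""
    return {
        "id": str(uuid.uuid4()),
        "topic": domain_topic,  # in a domain, selects the destination domain topic
        "subject": subject,
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "data": data,
        "dataVersion": "1.0",
    }


# Hypothetical customers and event types; all events share one request body.
batch = [
    domain_event("customer-0001", "Munsons.Resource.Shipped", "orders/1001", {"sku": "seed-7"}),
    domain_event("customer-0002", "Munsons.Resource.Delayed", "orders/1002", {"days": 2}),
]
body = json.dumps(batch)
print(body)
```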

Question #393

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are implementing an application by using Azure Event Grid to push near-real-time information to customers.

You have the following requirements:

• You must send events that span hundreds of event types to thousands of customers.

• The events must be filtered by event type before processing.

• Authentication and authorization must be handled by using Microsoft Entra ID.

• The events must be published to a single endpoint.

You need to implement Azure Event Grid.

Solution: Publish events to a custom topic. Create an event subscription for each customer.

Does the solution meet the goal?
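Each Event Grid event subscription can carry an event-type filter (`includedEventTypes`); when it is omitted, the subscription receives every event type. The routing below is a hypothetical in-memory model of that behavior, useful for reasoning about per-customer subscriptions; the `Subscription` class, `route` function, and event type names are illustrative and are not the Event Grid service or SDK.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Subscription:
    """Hypothetical model of one customer's event subscription."""
    customer: str
    included_event_types: set[str] = field(default_factory=set)  # empty means all types

    def matches(self, event: dict) -> bool:
        return not self.included_event_types or event["eventType"] in self.included_event_types


def route(events: list[dict], subscriptions: list[Subscription]) -> dict[str, list[str]]:
    """Return, per customer, the IDs of the events its subscription would deliver."""
    deliveries: dict[str, list[str]] = {s.customer: [] for s in subscriptions}
    for event in events:
        for subscription in subscriptions:
            if subscription.matches(event):
                deliveries[subscription.customer].append(event["id"])
    return deliveries


events = [
    {"id": "e1", "eventType": "Resource.Shipped"},
    {"id": "e2", "eventType": "Resource.Delayed"},
]
subscriptions = [
    Subscription("customer-a", {"Resource.Shipped"}),
    Subscription("customer-b"),
]
print(route(events, subscriptions))
```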

Question #394

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are implementing an application by using Azure Event Grid to push near-real-time information to customers.

You have the following requirements:

• You must send events that span hundreds of event types to thousands of customers.

• The events must be filtered by event type before processing.

• Authentication and authorization must be handled by using Microsoft Entra ID.

• The events must be published to a single endpoint.

You need to implement Azure Event Grid.

Solution: Enable ingress, create a TCP scale rule, and apply the rule to the container app.

Does the solution meet the goal?

Question #395

HOTSPOT

-

Case study

-

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

-

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. When you are ready to answer a question, click the Question button to return to the question.

Background

-

Munson’s Pickles and Preserves Farm is an agricultural cooperative corporation based in Washington, US, with farms located across the United States. The company supports agricultural production resources by distributing seeds, fertilizers, chemicals, fuel, and farm machinery to the farms.

Current Environment

-

The company is migrating all applications from an on-premises datacenter to Microsoft Azure. Applications support distributors, farmers, and internal company staff.

Corporate website

-

• The company hosts a public website located at http://www.munsonspicklesandpreservesfarm.com. The site supports farmers and distributors who request agricultural production resources.

Farms

-

• The company created a new customer tenant in the Microsoft Entra admin center to support authentication and authorization for applications.

Distributors

-

• Distributors integrate their applications with data that is accessible by using APIs hosted at http://www.munsonspicklesandpreservesfarm.com/api to receive and update resource data.

Requirements

-

The application components must meet the following requirements:

Corporate website

-

• The site must be migrated to Azure App Service.

• Costs must be minimized when hosting in Azure.

• Applications must automatically scale independent of the compute resources.

• All code changes must be validated by internal staff before release to production.

• File transfer speeds must improve, and webpage-load performance must increase.

• All site settings must be centrally stored, secured without using secrets, and encrypted at rest and in transit.

• A queue-based load leveling pattern must be implemented by using Azure Service Bus queues to support high volumes of website agricultural production resource requests.

Farms

-

• Farmers must authenticate to applications by using Microsoft Entra ID.

Distributors

-

• The company must track a custom telemetry value with each API call and monitor performance of all APIs.

• API telemetry values must be charted to evaluate variations and trends for resource data.

Internal staff

-

• App and API updates must be validated before release to production.

• Staff must be able to select a link to direct them back to the production app when validating an app or API update.

• Staff profile photos and email must be displayed on the website once they authenticate to applications by using their Microsoft Entra ID.
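App Service deployment slots support routing a browser session to a specific slot with the `x-ms-routing-name` query parameter; setting it to `self` sends the user back to the production slot. A minimal sketch, assuming a hypothetical app host name and a hypothetical `routing_link` helper, that builds such a link for staff who are validating an update in a staging slot:

```python
from __future__ import annotations

from urllib.parse import urlencode, urlunsplit


def routing_link(host: str, slot: str) -> str:
    """Build a URL that pins the browser session to a deployment slot.

    slot="self" routes back to the production slot.
    """
    query = urlencode({"x-ms-routing-name": slot})
    return urlunsplit(("https", host, "/", query, ""))


PROD_HOST = "munsons-web.azurewebsites.net"  # hypothetical app name
back_to_prod = routing_link(PROD_HOST, "self")
print(f'<a href="{back_to_prod}">Return to the production app</a>')
```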

Security

-

• All web communications must be secured by using TLS/HTTPS.

• Web content must be restricted by country/region to support corporate compliance standards.

• The principle of least privilege must be applied when providing any user rights or process access rights.

• Managed identities for Azure resources must be used to authenticate services that support Microsoft Entra ID authentication.

Issues

-

Corporate website

-

• Farmers report HTTP 503 errors at the same time as internal staff report that CPU and memory usage are high.

• Distributors report HTTP 502 errors at the same time as internal staff report that average response times and networking traffic are high.

• Internal staff report that webpage load sizes are large and that pages take a long time to load.

• Developers receive authentication errors when they connect to Service Bus while debugging locally.

Distributors

-

• Many API telemetry values are sent in a short period of time. Telemetry traffic, data costs, and storage costs must be reduced while preserving a statistically correct analysis of the data points sent by the APIs.

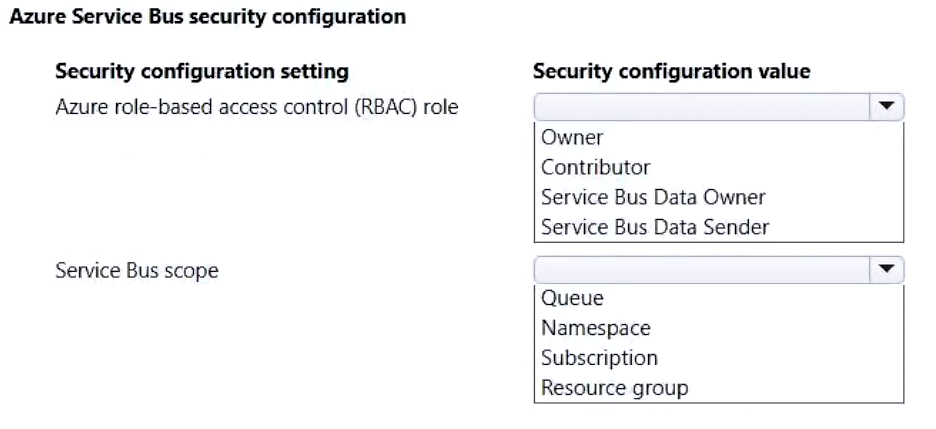

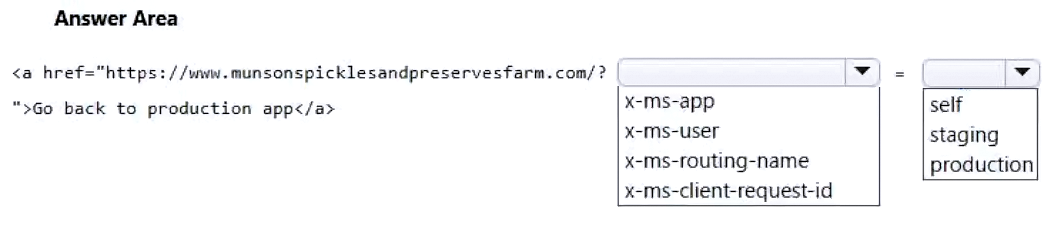

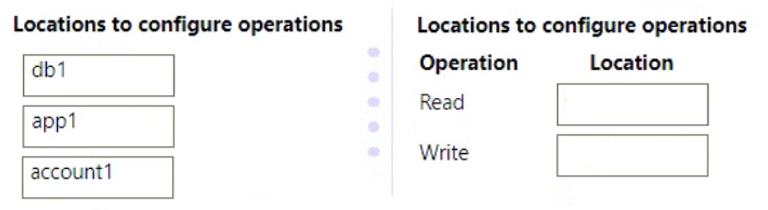

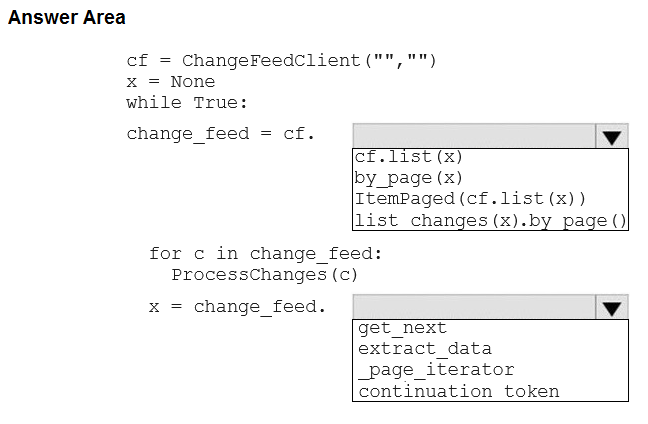

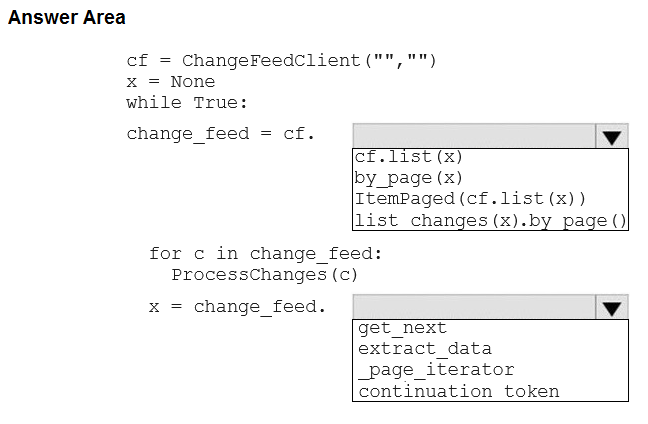

You need to provide internal staff access to the production site after a validation.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Question #396

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are implementing an application by using Azure Event Grid to push near-real-time information to customers.

You have the following requirements:

• You must send events that span hundreds of event types to thousands of customers.

• The events must be filtered by event type before processing.

• Authentication and authorization must be handled by using Microsoft Entra ID.

• The events must be published to a single endpoint.

You need to implement Azure Event Grid.

Solution: Publish events to a partner topic. Create an event subscription for each customer.

Does the solution meet the goal?

Question #397

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are implementing an application by using Azure Event Grid to push near-real-time information to customers.

You have the following requirements:

• You must send events that span hundreds of event types to thousands of customers.

• The events must be filtered by event type before processing.

• Authentication and authorization must be handled by using Microsoft Entra ID.

• The events must be published to a single endpoint.

You need to implement Azure Event Grid.

Solution: Publish events to a system topic. Create an event subscription for each customer.

Does the solution meet the goal?

Question #398

DRAG DROP

-

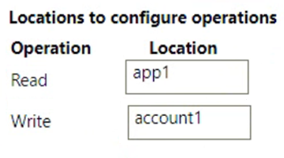

You have an Azure Cosmos DB for NoSQL API account named account1 and a database named db1. An application named app1 will access db1 to perform read and write operations.

You plan to modify the consistency levels for read and write operations performed by app1 on db1.

You must enforce the consistency level on a per-operation basis whenever possible.

You need to configure the consistency level for read and write operations.

Which locations should you configure? To answer, move the appropriate locations to the correct operations. You may use each location once, more than once, or not at all. You may need to move the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.
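Cosmos DB sets a default consistency level on the account. As we read the Cosmos DB documentation, a client or an individual request can override that default only with a weaker level, and the override applies to reads; writes are replicated according to the account default. The sketch below models those rules with a hypothetical `IntEnum` ordering (stronger levels have higher values) and hypothetical helper functions; it is not the Cosmos DB SDK.

```python
from __future__ import annotations

from enum import IntEnum
from typing import Optional


class Consistency(IntEnum):
    """The five Cosmos DB consistency levels; a higher value is a stronger guarantee."""
    EVENTUAL = 1
    CONSISTENT_PREFIX = 2
    SESSION = 3
    BOUNDED_STALENESS = 4
    STRONG = 5


def effective_read_consistency(account_default: Consistency,
                               request_override: Optional[Consistency] = None) -> Consistency:
    """A per-request override may relax, but never strengthen, the account default."""
    if request_override is None:
        return account_default
    if request_override > account_default:
        raise ValueError("an override cannot be stronger than the account default")
    return request_override


def effective_write_consistency(account_default: Consistency) -> Consistency:
    """In this model, writes always follow the account-level default."""
    return account_default


print(effective_read_consistency(Consistency.SESSION, Consistency.EVENTUAL).name)  # EVENTUAL
```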

Question #399

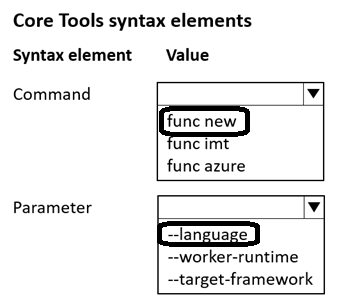

HOTSPOT

-

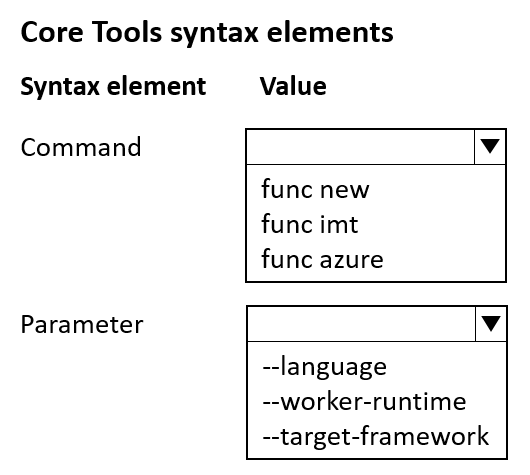

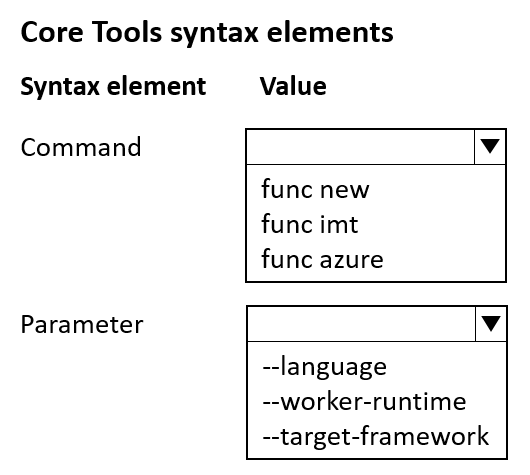

You are creating an Azure Functions app project in your local development environment by using Azure Functions Core Tools.

You must create the project in either Python or C# without using a template.

You need to specify the command and the parameter required to create the Azure Functions app project.

Which command and parameter should you specify? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
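In Azure Functions Core Tools, `func init` creates a project without adding a function from a template, and its `--worker-runtime` option selects the language stack. Functions are added from templates with `func new`. The Python sketch below only assembles the argument list for the two languages in the question. The helper name and the project name `MyFunctionProj` are hypothetical. The runtime values `python` and `dotnet-isolated` are assumed from Core Tools' documented options.

```python
import shlex

# Assumed Core Tools worker-runtime values for the two languages in the question.
WORKER_RUNTIMES = {"python": "python", "csharp": "dotnet-isolated"}


def func_init_args(project_name: str, language: str) -> list[str]:
    """Build the argv that creates a Functions project without a function template."""
    runtime = WORKER_RUNTIMES[language]
    return ["func", "init", project_name, "--worker-runtime", runtime]


if __name__ == "__main__":
    for lang in ("python", "csharp"):
        # shlex.join renders a shell-safe command line for display.
        print(shlex.join(func_init_args("MyFunctionProj", lang)))
```

On a machine with Core Tools installed, you could pass the returned list to `subprocess.run(...)`.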


Question #400

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure App Service plan named APSPlan1 set to the Basic B1 pricing tier. APSPlan1 contains an App Service web app named WebApp1.

You plan to enable schedule-based autoscaling for APSPlan1.

You need to minimize the cost of running WebApp1.

Solution: Scale down APSPlan1 to the Shared pricing tier.

Does the solution meet the goal?


Question #401

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure App Service plan named APSPlan1 set to the Basic B1 pricing tier. APSPlan1 contains an App Service web app named WebApp1.

You plan to enable schedule-based autoscaling for APSPlan1.

You need to minimize the cost of running WebApp1.

Solution: Scale out APSPlan1.

Does the solution meet the goal?


Question #402

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure App Service plan named APSPlan1 set to the Basic B1 pricing tier. APSPlan1 contains an App Service web app named WebApp1.

You plan to enable schedule-based autoscaling for APSPlan1.

You need to minimize the cost of running WebApp1.

Solution: Scale up APSPlan1 to the Premium V2 pricing tier.

Does the solution meet the goal?
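Each solution in this series depends on which App Service tiers can autoscale. Autoscale, including schedule-based profiles, is available from the Standard tier upward. Free and Shared cannot scale out at all, and Basic supports only manual scale-out. The sketch below encodes that rule and picks the cheapest qualifying tier from the tiers named in the questions. The `cost_rank` values are an assumed relative ordering, not published prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppServiceTier:
    name: str
    cost_rank: int            # assumed relative cost order only, no real prices
    supports_autoscale: bool  # rule- or schedule-based autoscale


# Tiers named in this question series, cheapest first (assumed ordering).
TIERS = [
    AppServiceTier("Shared", 1, False),
    AppServiceTier("Basic", 2, False),      # manual scale-out only
    AppServiceTier("Standard", 3, True),
    AppServiceTier("PremiumV2", 4, True),
]


def cheapest_autoscale_tier(tiers: list[AppServiceTier]) -> AppServiceTier:
    """Return the lowest-cost tier that still supports autoscale."""
    candidates = [t for t in tiers if t.supports_autoscale]
    if not candidates:
        raise ValueError("no tier in the list supports autoscale")
    return min(candidates, key=lambda t: t.cost_rank)


if __name__ == "__main__":
    print(cheapest_autoscale_tier(TIERS).name)  # Standard
```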


Question #403

HOTSPOT

-

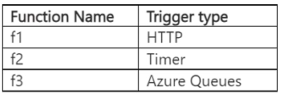

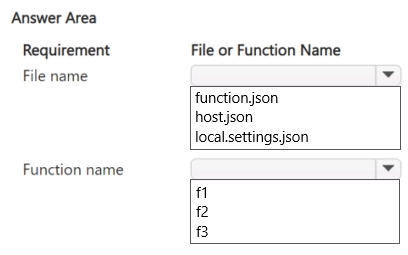

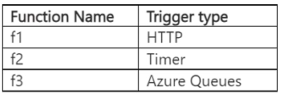

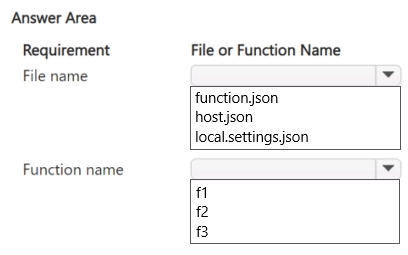

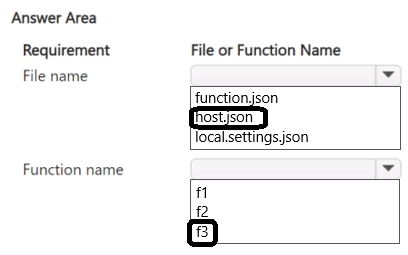

A company has an Azure Functions app that uses the Consumption hosting plan. The app contains the following functions:

You plan to enable dynamic concurrency on the app. The company requires that each function has its concurrency level managed separately.

You need to configure the app for dynamic concurrency.

Which file or function names should you use? To answer, select the appropriate values in the answer area.

NOTE: Each correct selection is worth one point.
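Dynamic concurrency is enabled for the whole function app in host.json, under the `concurrency` section. Once it is on, the host adjusts concurrency at runtime instead of relying on fixed per-trigger settings. The Python sketch below writes such a host.json with the standard `json` module. The `dynamicConcurrencyEnabled` and `snapshotPersistenceEnabled` names are taken from the Functions host.json reference and should be checked against the runtime version you target. The function names from the question's exhibit are not reproduced, and the project directory is hypothetical.

```python
import json
from pathlib import Path

# host.json settings assumed from the Azure Functions host.json reference.
HOST_JSON = {
    "version": "2.0",
    "concurrency": {
        # Let the host tune concurrency at runtime.
        "dynamicConcurrencyEnabled": True,
        # Persist learned concurrency values so new instances start from them.
        "snapshotPersistenceEnabled": True,
    },
}


def write_host_json(project_dir: Path) -> Path:
    """Write host.json at the root of a (hypothetical) Functions project directory."""
    path = project_dir / "host.json"
    path.write_text(json.dumps(HOST_JSON, indent=2), encoding="utf-8")
    return path
```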


Question #404

You are developing an app to store globally distributed data in several Azure Blob Storage containers. Each container hosts multiple blobs in which instances of the app store data. You enable versioning and soft delete for the blobs.

App testing and incorrect code have frequently corrupted data. Development of the app must allow data to be restored to a previous day for testing.

You need to configure the storage account to support point-in-time restore.

What should you do?
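Point-in-time restore for block blobs builds on three other features: blob versioning, blob soft delete, and change feed. In addition, the restore window must be shorter than the soft-delete retention period. The scenario already has versioning and soft delete turned on. The validator below is a hypothetical sketch of those prerequisites. `DataProtectionSettings` and its fields are illustrative names, not properties of an Azure SDK type.

```python
from dataclasses import dataclass


@dataclass
class DataProtectionSettings:
    """Hypothetical view of a storage account's blob data-protection settings."""
    versioning_enabled: bool
    change_feed_enabled: bool
    soft_delete_days: int  # 0 means blob soft delete is disabled
    restore_days: int      # requested point-in-time restore window


def pitr_problems(s: DataProtectionSettings) -> list[str]:
    """List unmet prerequisites for point-in-time restore (empty list means OK)."""
    problems = []
    if not s.versioning_enabled:
        problems.append("enable blob versioning")
    if not s.change_feed_enabled:
        problems.append("enable change feed")
    if s.soft_delete_days <= 0:
        problems.append("enable blob soft delete")
    elif s.restore_days >= s.soft_delete_days:
        problems.append("restore window must be shorter than soft-delete retention")
    if s.restore_days < 1:
        problems.append("restore window must be at least 1 day")
    return problems
```

Under this rule, a one-day restore window (enough to return to the previous day) needs soft-delete retention of at least two days.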


Question #405

You manage an Azure subscription that contains 100 Azure App Service web apps. Each web app is associated with an individual Application Insights instance.

You plan to remove Classic availability tests from all Application Insights instances that have this functionality configured.

You have the following PowerShell statement:

```powershell
Get-AzApplicationInsightsWebTest | Where-Object { $condition }
```

You need to set the value of the $condition variable.

Which value should you use?


Question #406

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You deploy an Azure Container Apps app and disable ingress on the container app.

Users report that they are unable to access the container app. You investigate and observe that the app has scaled to 0 instances.

You need to resolve the issue with the container app.

Solution: Enable ingress, create a TCP scale rule, and apply the rule to the container app.

Does the solution meet the goal?
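Azure Container Apps reads its scaling behavior from the `scale` section of the app template. That section holds `minReplicas`, `maxReplicas`, and a list of rules, such as `http` (keyed on concurrent requests) or `tcp` (keyed on concurrent connections). With ingress disabled, no HTTP or TCP traffic reaches the app to trigger scaling. An app with `minReplicas` of 0 and no event-driven rule therefore has nothing to bring it back from zero. The Python sketch below builds that fragment as a dictionary and applies a rough reachability check. The property names are assumed from the Container Apps ARM schema. The rule names, thresholds, and `can_wake_from_zero` helper are hypothetical.

```python
def scale_section(min_replicas: int, max_replicas: int, rules: list) -> dict:
    """Build a Container Apps `template.scale` fragment (schema assumed from ARM)."""
    if not 0 <= min_replicas <= max_replicas:
        raise ValueError("require 0 <= minReplicas <= maxReplicas")
    return {"minReplicas": min_replicas, "maxReplicas": max_replicas, "rules": rules}


def tcp_rule(name: str, concurrent_connections: int) -> dict:
    # Metadata values are strings in the ARM schema.
    return {"name": name, "tcp": {"metadata": {"concurrentConnections": str(concurrent_connections)}}}


def http_rule(name: str, concurrent_requests: int) -> dict:
    return {"name": name, "http": {"metadata": {"concurrentRequests": str(concurrent_requests)}}}


def can_wake_from_zero(scale: dict, ingress_enabled: bool) -> bool:
    """Rough model: can anything bring the app back from zero replicas?"""
    if scale["minReplicas"] > 0:
        return True  # the app never sits at zero replicas
    for rule in scale["rules"]:
        if "http" in rule or "tcp" in rule:
            if ingress_enabled:
                return True  # traffic through ingress can trigger these rules
        else:
            return True  # event-driven (custom) rules do not depend on ingress
    return False
```

Setting `minReplicas` to 1 or more is the other way to keep the app from sitting at zero replicas, at the cost of a replica that is always allocated.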


Question #407

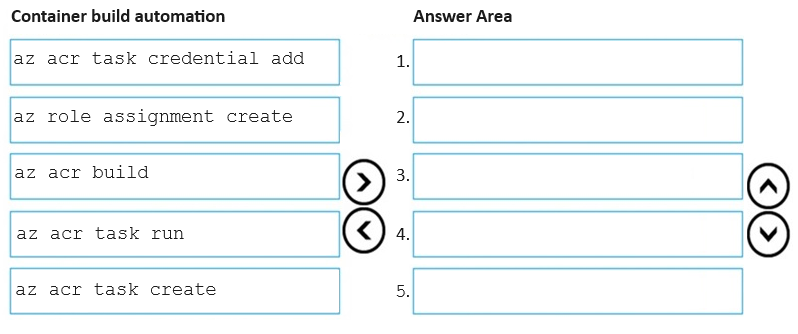

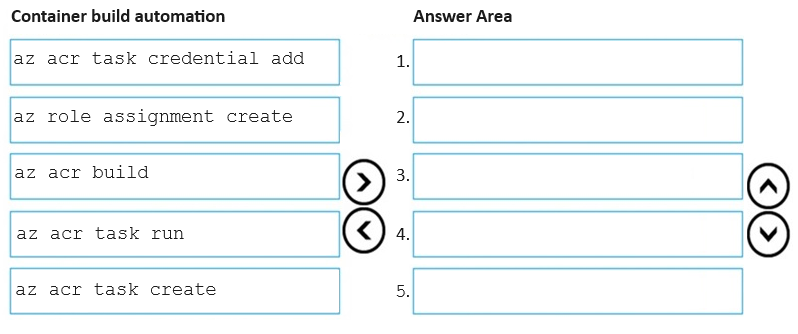

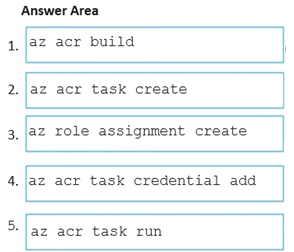

DRAG DROP

-

You have two Azure Container Registry (ACR) instances: ACR01 and ACR02.

You plan to implement a containerized application named APP1 that will use a base image named BASE1. The image for APP1 will be stored in ACR01. The image BASE1 will be stored in ACR02.

You need to automate the planned implementation by using a sequence of five Azure command-line interface (Azure CLI) commands. Your solution must ensure that the APP1 image stored in ACR01 will be automatically updated when the BASE1 image is updated.

In which order should you perform the actions? To answer, move all container build automation options from the list of container build automations to the answer area and arrange them in the correct order.

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.


Question #408

A company uses an Azure Blob Storage account for archiving.

The company requires that data in the Blob Storage account is stored only in the archive tier.

You need to ensure that data copied to the Blob Storage account is moved to the archive tier.

What should you do?
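A common way to force blobs into the archive tier is a lifecycle management rule whose `tierToArchive` action applies as soon as a blob exists (`daysAfterModificationGreaterThan: 0`). An account's default access tier cannot be set to Archive. The sketch below builds such a policy document in Python. The JSON shape follows the lifecycle management policy schema, and the rule name is hypothetical. Lifecycle policies are evaluated periodically (roughly once a day), so blobs are not archived at the instant they are copied.

```python
import json


def archive_everything_policy(rule_name: str = "archive-on-arrival") -> dict:
    """Lifecycle policy that moves every block blob to the archive tier."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": rule_name,  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": {"blobTypes": ["blockBlob"]},
                    "actions": {
                        "baseBlob": {
                            # 0 days: eligible on the first policy run after upload.
                            "tierToArchive": {"daysAfterModificationGreaterThan": 0}
                        }
                    },
                },
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(archive_everything_policy(), indent=2))
```

You could then save the printed JSON as policy.json and apply it with `az storage account management-policy create --account-name <account> --resource-group <group> --policy @policy.json`.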


Question #409

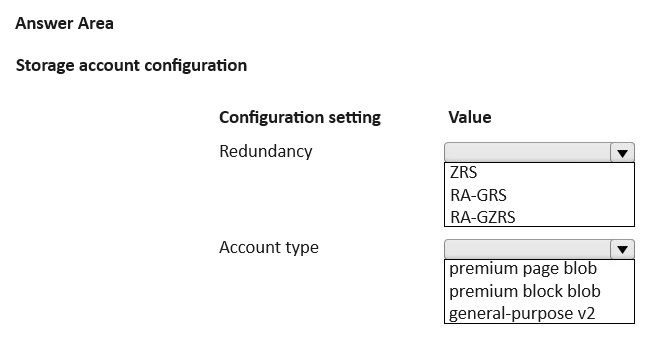

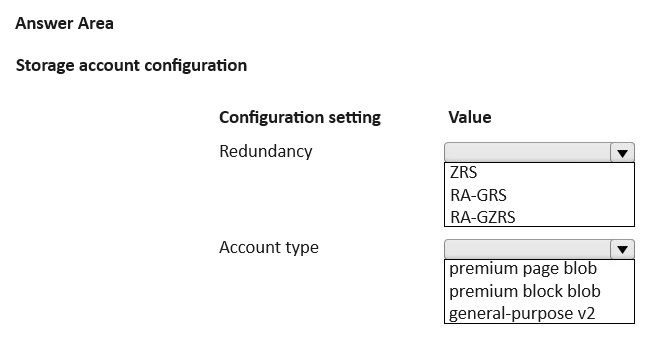

HOTSPOT

-

You have the following data lifecycle management policy:

You plan to implement an Azure Blob Storage account and apply Policy1 to it. The solution should maximize resiliency and performance.

You need to configure the account to support the policy.

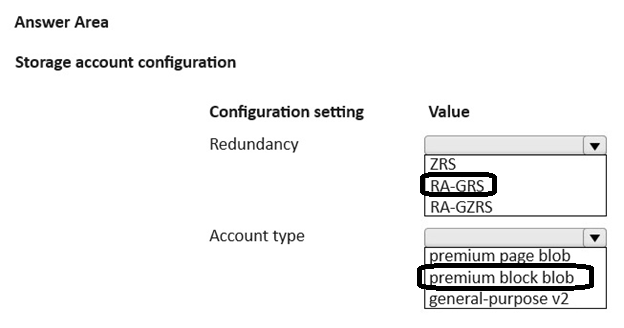

Which redundancy option and storage account type should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
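Policy1 appears only as an image, so the sketch below assumes it moves blobs to the archive tier. If it does, two constraints narrow the choice. First, the archive tier is offered only on LRS, GRS, and RA-GRS accounts, not on ZRS, GZRS, or RA-GZRS. Second, access tiering is a feature of standard general-purpose v2 (or legacy Blob Storage) accounts; premium block blob accounts offer higher performance but no access tiers. The sketch encodes those rules and picks the most resilient option that remains. The resilience ranking is itself an assumption.

```python
# Redundancy options ordered by assumed resilience (higher index = more resilient).
REDUNDANCY_BY_RESILIENCE = ["LRS", "ZRS", "GRS", "RA-GRS", "GZRS", "RA-GZRS"]

# Redundancy options that support the archive access tier.
ARCHIVE_CAPABLE = {"LRS", "GRS", "RA-GRS"}


def most_resilient_redundancy(needs_archive: bool) -> str:
    """Pick the most resilient redundancy option compatible with the policy."""
    allowed = [r for r in REDUNDANCY_BY_RESILIENCE
               if not needs_archive or r in ARCHIVE_CAPABLE]
    return allowed[-1]


def account_kind(needs_tiering: bool) -> str:
    """Premium block blob accounts (BlockBlobStorage) do not support access tiers."""
    return "StorageV2" if needs_tiering else "BlockBlobStorage"
```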


Question #410

HOTSPOT

-

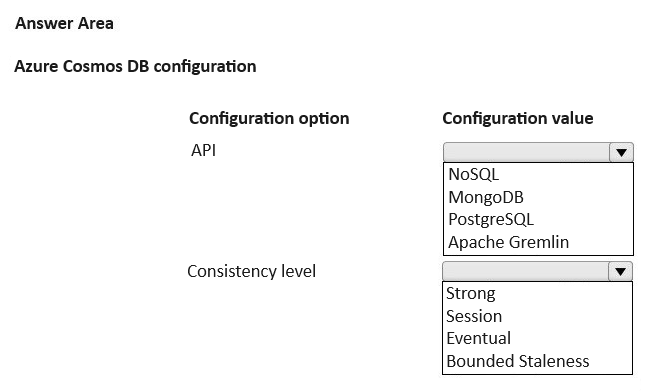

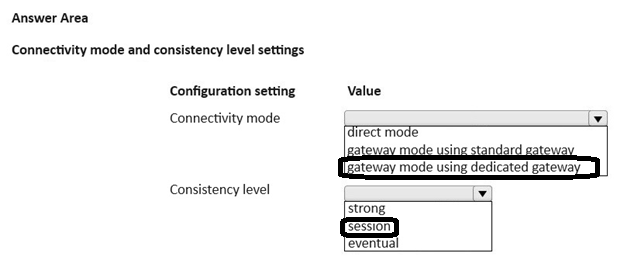

You have an Azure Cosmos DB for NoSQL API account named account1. Multiple instances of an on-premises application named app1 read data from account1.

You plan to implement the integrated cache for connections from the instances of app1 to account1.

You need to set the connection mode and maximum consistency level of app1.

Which values should you use for the configuration settings? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
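The integrated cache lives in a dedicated gateway, so it only sees requests that pass through that gateway. Two conditions follow. The client must use Gateway connection mode with the dedicated gateway connection string. In addition, only reads issued at Session or Eventual consistency are served from the cache; reads at any other level bypass it. The sketch below models those two conditions. The enum and function names are hypothetical.

```python
from enum import IntEnum


class Consistency(IntEnum):
    """Cosmos DB consistency levels, weakest (0) to strongest (4)."""
    EVENTUAL = 0
    CONSISTENT_PREFIX = 1
    SESSION = 2
    BOUNDED_STALENESS = 3
    STRONG = 4


# Levels whose reads the integrated cache can serve.
CACHE_ELIGIBLE = {Consistency.EVENTUAL, Consistency.SESSION}


def served_by_integrated_cache(connection_mode: str, read_consistency: Consistency) -> bool:
    """Rough model of whether a read can be answered from the integrated cache."""
    return connection_mode == "Gateway" and read_consistency in CACHE_ELIGIBLE


def strongest_cacheable_level() -> Consistency:
    """The strongest consistency level that still uses the cache."""
    return max(CACHE_ELIGIBLE)
```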


Question #411

You are developing an Azure Cosmos DB solution that will be deployed to multiple Azure regions.

Your solution must meet the following requirements:

• Read operations will never receive write operations that are out of order.

• Maximize concurrency of read operations in all regions.

You need to choose the consistency level for the solution.

Which consistency level should you use?
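Cosmos DB presents its five consistency levels as a spectrum. Each step toward Strong adds guarantees but costs read throughput and latency. "Maximize concurrency of read operations" therefore points at the weakest level that still meets the ordering requirement. Consistent Prefix is the level that guarantees readers never see writes out of order. The sketch below is a simplified model of that spectrum, with a helper that picks the weakest adequate level. The guarantee labels are informal, not Cosmos DB terms.

```python
# Simplified model: each level adds guarantees on top of the weaker ones,
# mirroring the spectrum Cosmos DB documents (weakest first).
SPECTRUM = [
    ("Eventual", set()),
    ("ConsistentPrefix", {"no_out_of_order_reads"}),
    ("Session", {"read_your_writes", "monotonic_reads"}),
    ("BoundedStaleness", {"bounded_lag"}),
    ("Strong", {"linearizable"}),
]


def weakest_level(required: set[str]) -> str:
    """Pick the weakest (highest-throughput) level providing every required guarantee."""
    provided: set[str] = set()
    for name, added in SPECTRUM:
        provided |= added
        if required <= provided:
            return name
    raise ValueError(f"unknown guarantees: {sorted(required - provided)}")
```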


Question #412

You have an Azure Storage queue named queue1.

You plan to develop code that will process messages in queue1.

You need to implement a queue operation to set the visibility timeout value of individual messages in queue1.

Which two operations can you use? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.
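Three Queue service operations accept a visibility timeout:

- Put Message: the new message stays hidden for that long.
- Get Messages: each retrieved message is hidden again for that long, 30 seconds by default.
- Update Message: resets the timeout of one message you already hold.

In the Python SDK (`azure-storage-queue`), the matching `QueueClient` methods are `send_message`, `receive_messages`, and `update_message`, each with a `visibility_timeout` argument. The sketch below does not call Azure. It is an in-memory toy that mimics those semantics with an explicit clock. `ToyQueue` is hypothetical and skips real-service details such as pop receipts and dequeue counts.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyMessage:
    id: int
    content: str
    visible_at: float  # clock time (seconds) when the message becomes visible


@dataclass
class ToyQueue:
    """In-memory stand-in for a storage queue; `now` is supplied by the caller."""
    messages: list = field(default_factory=list)
    next_id: int = 0

    def put(self, content: str, now: float, visibility_timeout: float = 0) -> int:
        """Like Put Message: the message stays hidden for visibility_timeout seconds."""
        msg = ToyMessage(self.next_id, content, now + visibility_timeout)
        self.next_id += 1
        self.messages.append(msg)
        return msg.id

    def get(self, now: float, visibility_timeout: float = 30) -> Optional[ToyMessage]:
        """Like Get Messages with one message: return a visible message and hide it again."""
        for msg in self.messages:
            if msg.visible_at <= now:
                msg.visible_at = now + visibility_timeout
                return msg
        return None

    def update(self, msg_id: int, now: float, visibility_timeout: float) -> None:
        """Like Update Message: reset one message's visibility timeout."""
        for msg in self.messages:
            if msg.id == msg_id:
                msg.visible_at = now + visibility_timeout
                return
        raise KeyError(msg_id)
```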


Question #413

HOTSPOT

-

Case study

-

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

-

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. When you are ready to answer a question, click the Question button to return to the question.

Background

-

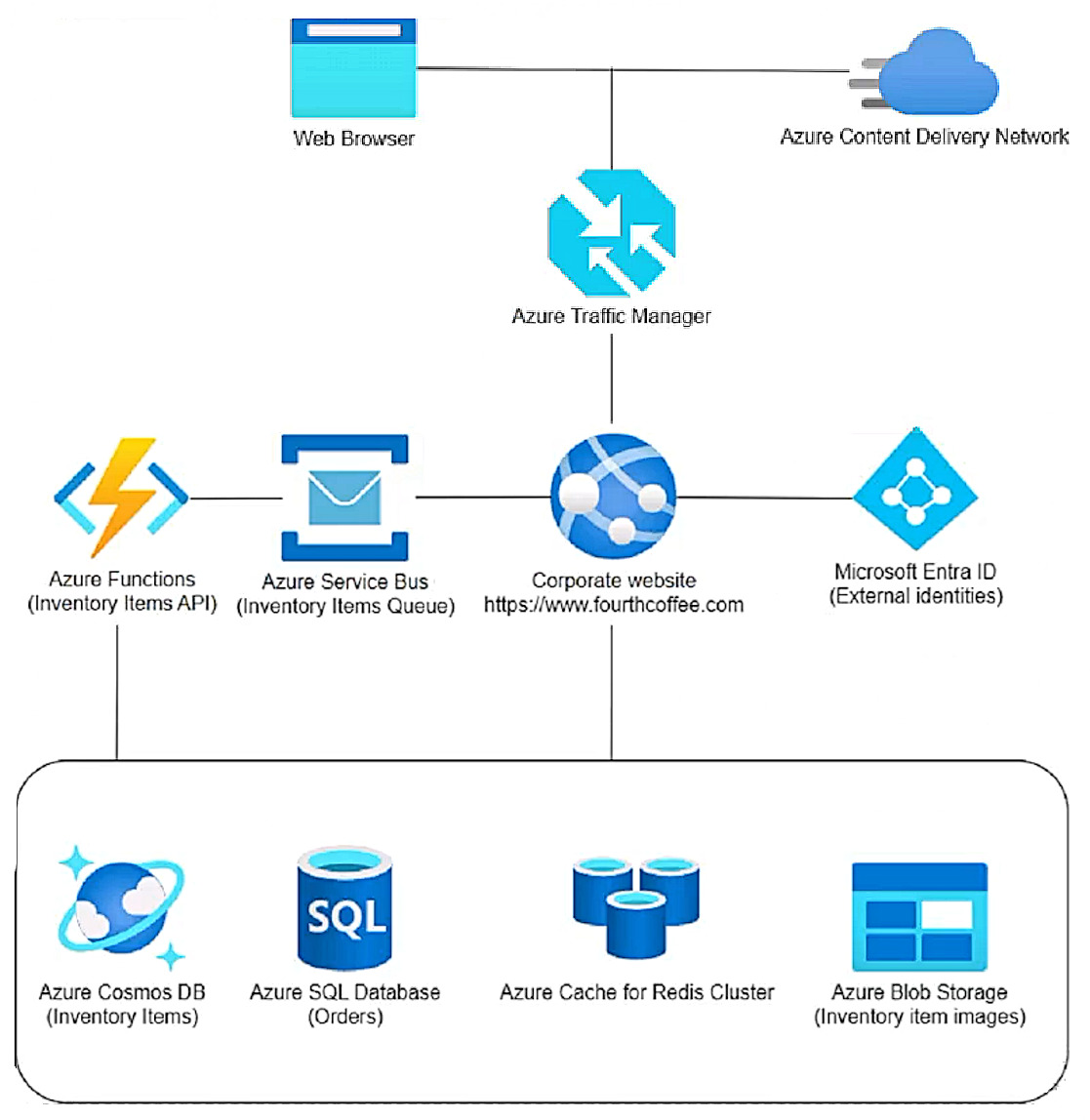

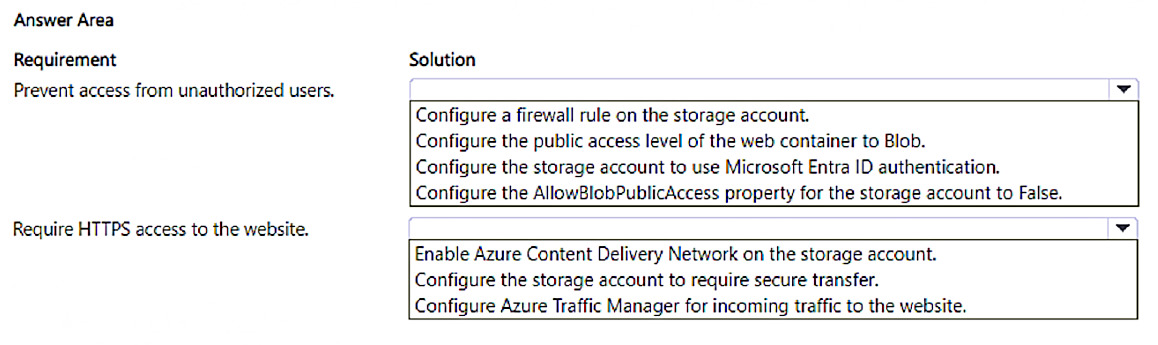

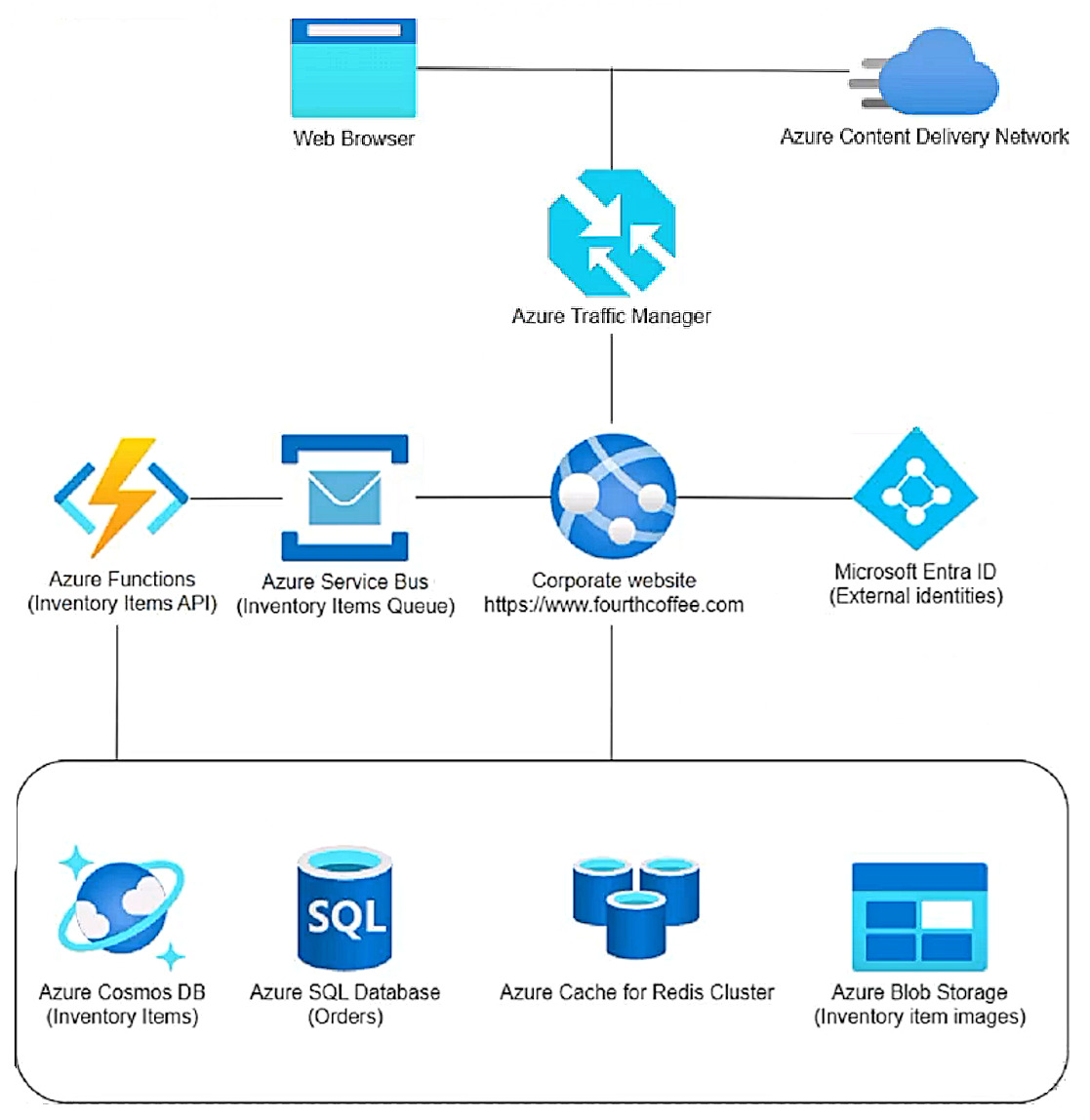

Fourth Coffee is a global coffeehouse chain and coffee company recognized as one of the world’s most influential coffee brands. The company is renowned for its specialty coffee beverages, including a wide range of espresso-based drinks, teas, and other beverages. Fourth Coffee operates thousands of stores worldwide.

Current environment

-

The company is developing cloud-native applications hosted in Azure.

Corporate website

-

The company hosts a public website located at http://www.fourthcoffee.com/. The website is used to place orders as well as view and update inventory items.

Inventory items

-

In addition to its core coffee offerings, Fourth Coffee recently expanded its menu to include inventory items such as lunch items, snacks, and merchandise. Corporate team members constantly update inventory. Users can customize items. Corporate team members configure inventory items and associated images on the website.

Orders

-

Associates in the store serve customized beverages and items to customers. Orders are placed on the website for pickup.

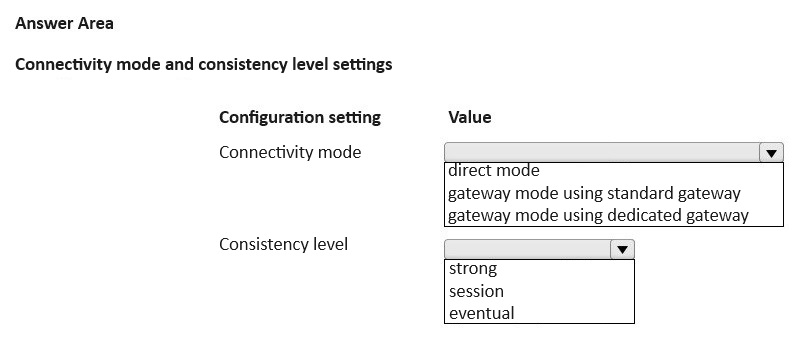

The application components process data as follows:

1. Azure Traffic Manager routes a user order request to the corporate website hosted in Azure App Service.

2. Azure Content Delivery Network serves static images and content to the user.

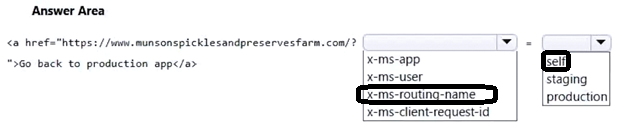

3. The user signs in to the application through a Microsoft Entra ID for customers tenant.

4. Users search for items and place an order on the website as item images are pulled from Azure Blob Storage.

5. Item customizations are placed in an Azure Service Bus queue message.

6. Azure Functions processes item customizations and saves the customized items to Azure Cosmos DB.

7. The website saves order details to Azure SQL Database.

8. SQL Database query results are cached in Azure Cache for Redis to improve performance.

The application consists of the following Azure services:

Requirements

-

The application components must meet the following requirements:

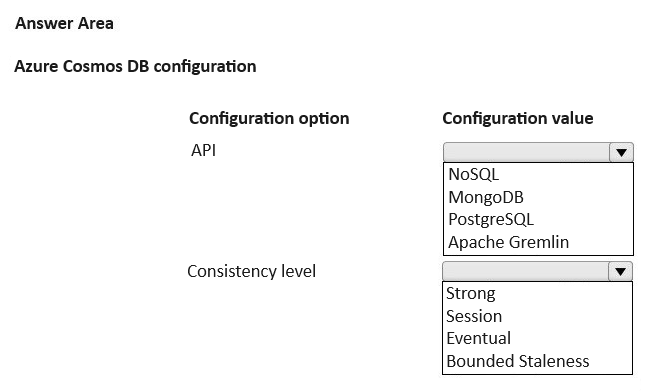

• Azure Cosmos DB development must use a native API that receives the latest updates and stores data in a document format.

• Costs must be minimized for all Azure services.

• Developers must test Azure Blob Storage integrations locally before deployment to Azure. Testing must support the latest versions of the Azure Storage APIs.

Corporate website

-

• User authentication and authorization must allow one-time passcode sign-in methods and social identity providers (Google or Facebook).

• Static web content must be stored closest to end users to reduce network latency.

Inventory items

-

• Customized items read from Azure Cosmos DB must maximize throughput while ensuring data is accurate for the current user on the website.

• Processing of inventory item updates must automatically scale and enable updates across an entire Azure Cosmos DB container.

• Inventory items must be processed in the order they were placed in the queue.

• Inventory item images must be stored as JPEG files in their native format, including exchangeable image file format (Exif) data stored with the blob data when the image file is uploaded.

• The Inventory Items API must securely access the Azure Cosmos DB data.

Orders

-

• Orders must receive inventory item changes automatically after inventory items are updated or saved.

Issues

-

• Developers are storing the Azure Cosmos DB credentials insecurely, in clear text, within the Inventory Items API code.

• Production Azure Cache for Redis maintenance has negatively affected application performance.

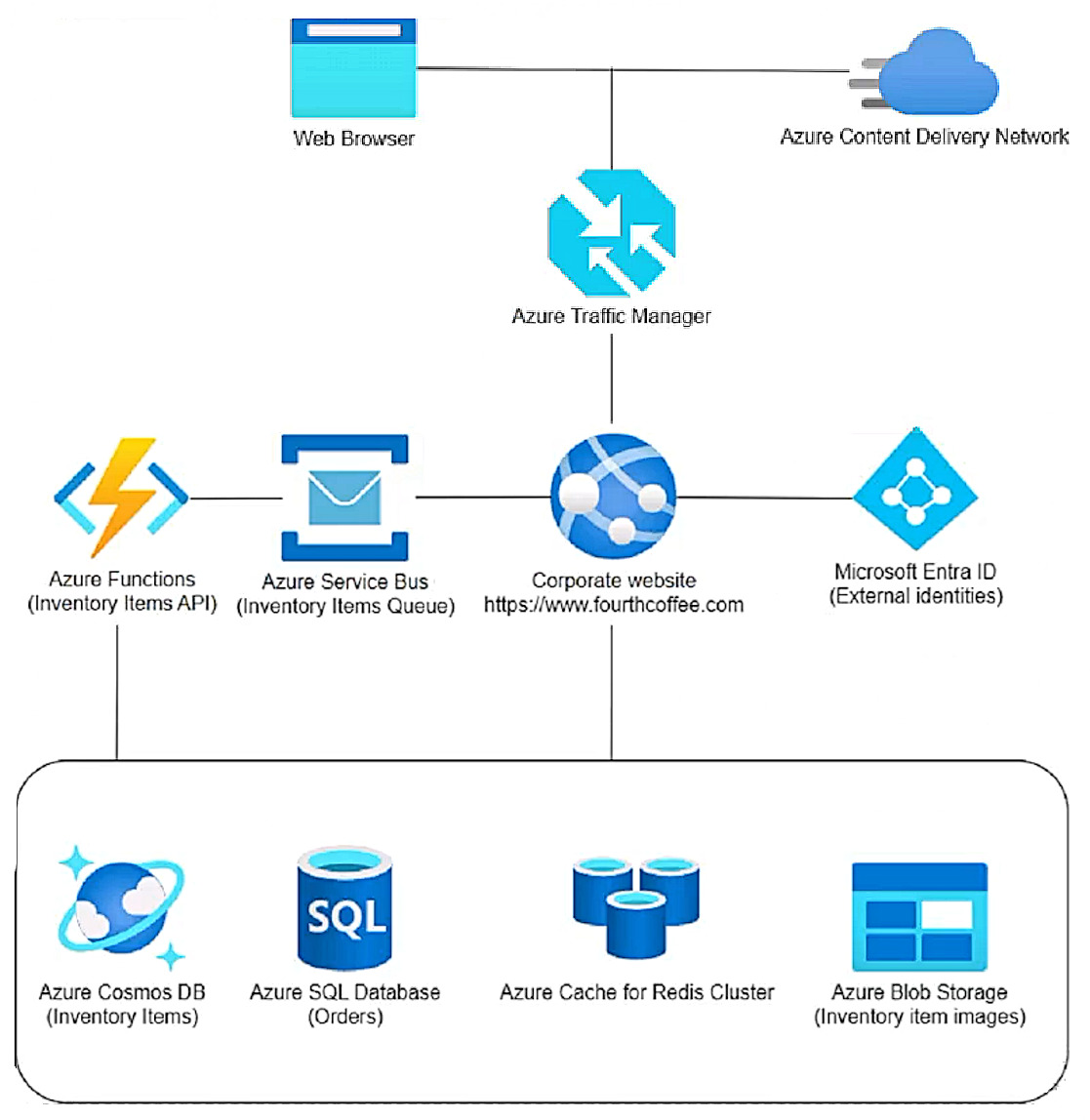

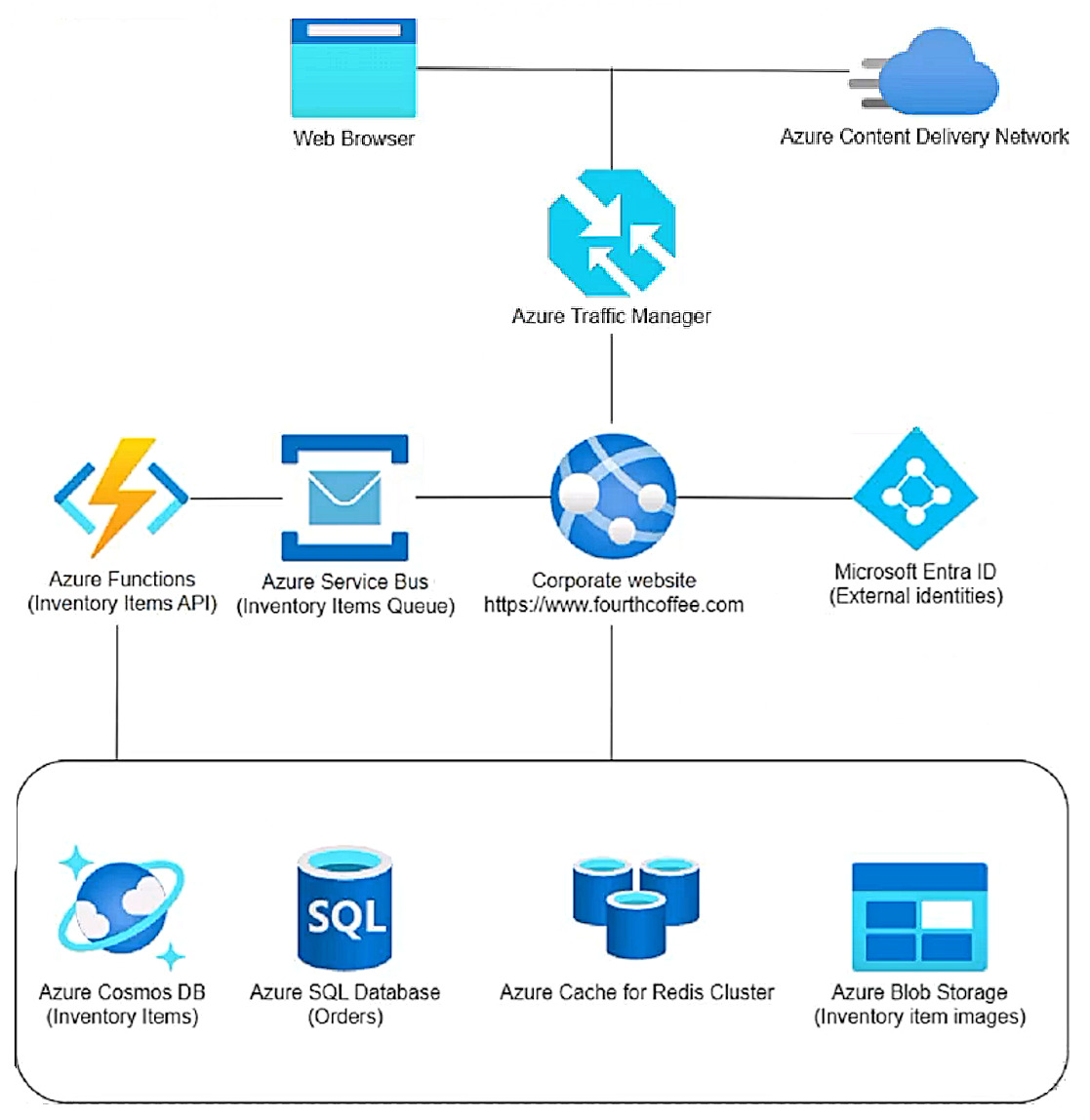

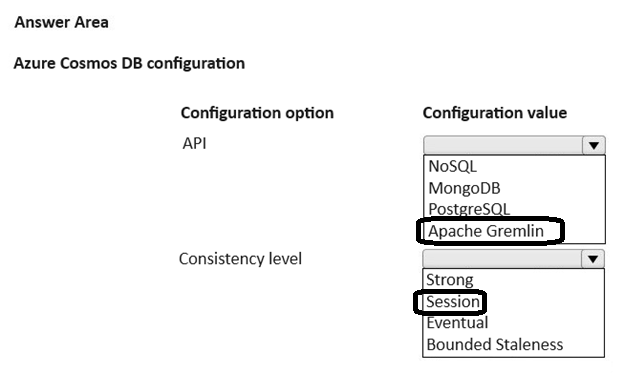

You need to save customized items to Azure Cosmos DB.

Which Azure Cosmos DB configuration should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Question #414

HOTSPOT

-

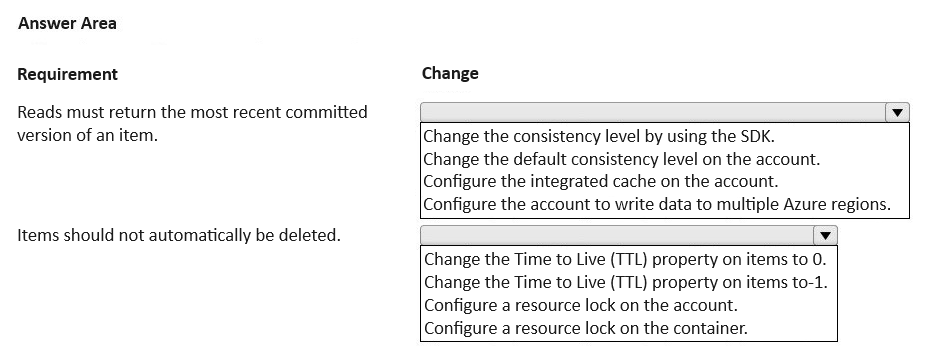

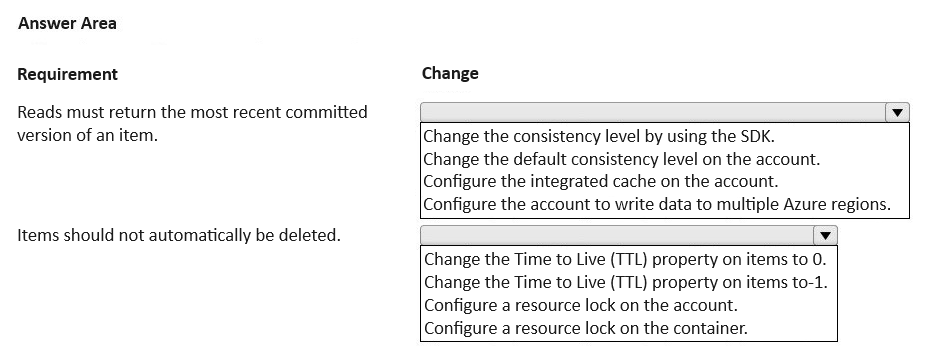

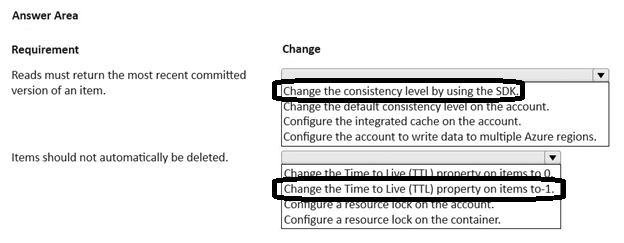

A company has an Azure Cosmos DB for NoSQL account. The account is configured for session consistency. Data is written to a single Azure region and data can be read from three Azure regions.

An application that will access the Azure Cosmos DB for NoSQL container data using an SDK has the following requirements:

• Reads from the application must return the most recent committed version of an item from any Azure region.

• The container items should not automatically be deleted.

You need to implement the changes to the Azure Cosmos DB for NoSQL account.

What should you do? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
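Two settings are in play here. Reading the latest committed version from any region maps most naturally to Strong consistency on the account, which Cosmos DB permits for accounts with a single write region. Automatic deletion is governed by time to live (TTL), set per container and per item. With the container's default TTL turned off, items never expire, even if an item carries its own `ttl` value. With the default set to -1, TTL is on, but items expire only if they set `ttl` themselves. The sketch below models those TTL rules. The function name is hypothetical.

```python
from typing import Optional


def item_expires(container_default_ttl: Optional[int], item_ttl: Optional[int] = None) -> bool:
    """Model whether Cosmos DB time to live will ever auto-delete an item.

    container_default_ttl: None = TTL off; -1 = on with no default expiry; n > 0 = seconds.
    item_ttl: optional per-item override (-1 = never expire).
    """
    if container_default_ttl is None:
        return False  # TTL disabled on the container: per-item ttl values are ignored
    effective = item_ttl if item_ttl is not None else container_default_ttl
    return effective != -1
```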


Question #415

HOTSPOT

-

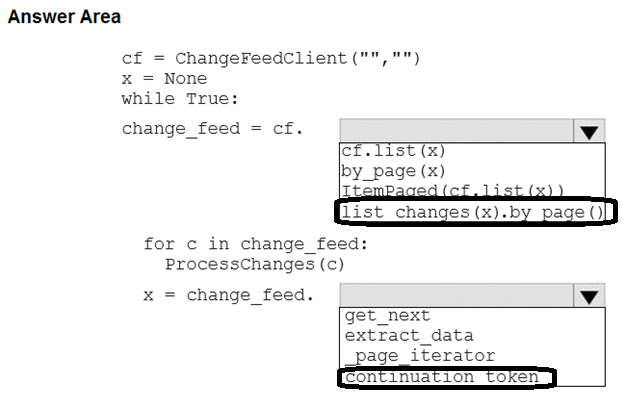

You are developing an application that monitors data added to an Azure Blob storage account.

You need to process each change made to the storage account.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Question #416

You manage an Azure Cosmos DB for NoSQL API account named account1. The account contains a database named db1, which contains a container named container1. You configure account1 with the session consistency level.

You plan to develop an application named App1 that will access container1. Individual instances of App1 must perform reads and writes. App1 must allow multiple nodes to participate in the same session.

You need to configure an object to share the session token between the nodes.

Which object should you use?
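Cosmos DB returns a session token with each response, and an SDK client caches that token for its own requests. When several nodes must share one session, the token has to be passed between them explicitly. In the .NET SDK, this is done through the `SessionToken` property of the per-request options object (`ItemRequestOptions`). The toy model below shows why sharing the token matters. A node that presents another node's token is guaranteed to see that node's write even when a lagging replica would otherwise answer. `Replica`, `ToyAccount`, and the shared token store are hypothetical, and a real session token is not a bare sequence number.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Replica:
    """Toy replica that has applied every write up to log sequence number `lsn`."""
    lsn: int = 0
    data: dict = field(default_factory=dict)


@dataclass
class ToyAccount:
    """A writable primary plus a lagging secondary; a 'session token' is just an LSN here."""
    primary: Replica = field(default_factory=Replica)
    secondary: Replica = field(default_factory=Replica)

    def write(self, key: str, value: str) -> int:
        """Apply a write on the primary and return the caller's new session token."""
        self.primary.lsn += 1
        self.primary.data[key] = value
        return self.primary.lsn

    def replicate(self) -> None:
        """Bring the secondary fully up to date."""
        self.secondary.lsn = self.primary.lsn
        self.secondary.data = dict(self.primary.data)

    def read(self, key: str, session_token: int = 0) -> Optional[str]:
        """Read from the secondary only if it has caught up to the caller's token."""
        replica = self.secondary if self.secondary.lsn >= session_token else self.primary
        return replica.data.get(key)


if __name__ == "__main__":
    account = ToyAccount()
    shared_tokens = {}  # hypothetical store both App1 nodes can reach
    shared_tokens["session-42"] = account.write("item1", "v1")  # node A writes
    print(account.read("item1", shared_tokens["session-42"]))   # node B reads: v1
```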


Question #417

DRAG DROP

-

Case study

-

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

-

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. When you are ready to answer a question, click the Question button to return to the question.

Background

-

Fourth Coffee is a global coffeehouse chain and coffee company recognized as one of the world’s most influential coffee brands. The company is renowned for its specialty coffee beverages, including a wide range of espresso-based drinks, teas, and other beverages. Fourth Coffee operates thousands of stores worldwide.

Current environment

-

The company is developing cloud-native applications hosted in Azure.

Corporate website

-

The company hosts a public website located at http://www.fourthcoffee.com/. The website is used to place orders as well as view and update inventory items.

Inventory items

-

In addition to its core coffee offerings, Fourth Coffee recently expanded its menu to include inventory items such as lunch items, snacks, and merchandise. Corporate team members constantly update inventory. Users can customize items. Corporate team members configure inventory items and associated images on the website.

Orders

-

Associates in the store serve customized beverages and items to customers. Orders are placed on the website for pickup.

The application components process data as follows:

1. Azure Traffic Manager routes a user order request to the corporate website hosted in Azure App Service.

2. Azure Content Delivery Network serves static images and content to the user.

3. The user signs in to the application through a Microsoft Entra ID for customers tenant.

4. Users search for items and place an order on the website as item images are pulled from Azure Blob Storage.

5. Item customizations are placed in an Azure Service Bus queue message.

6. Azure Functions processes item customizations and saves the customized items to Azure Cosmos DB.

7. The website saves order details to Azure SQL Database.

8. SQL Database query results are cached in Azure Cache for Redis to improve performance.

The application consists of the following Azure services:

Requirements

-

The application components must meet the following requirements:

• Azure Cosmos DB development must use a native API that receives the latest updates and stores data in a document format.

• Costs must be minimized for all Azure services.

• Developers must test Azure Blob Storage integrations locally before deployment to Azure. Testing must support the latest versions of the Azure Storage APIs.

Corporate website

-

• User authentication and authorization must allow one-time passcode sign-in methods and social identity providers (Google or Facebook).

• Static web content must be stored closest to end users to reduce network latency.

Inventory items

-

• Customized items read from Azure Cosmos DB must maximize throughput while ensuring data is accurate for the current user on the website.

• Processing of inventory item updates must automatically scale and enable updates across an entire Azure Cosmos DB container.

• Inventory items must be processed in the order they were placed in the queue.

• Inventory item images must be stored as JPEG files in their native format, including exchangeable image file format (Exif) data stored with the blob data when the image file is uploaded.

• The Inventory Items API must securely access the Azure Cosmos DB data.
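The ordering requirement is commonly met with Service Bus message sessions, which deliver messages that share a session ID in first-in, first-out order. The toy model below is not the Service Bus SDK. It only illustrates the FIFO-per-session guarantee, and the class name and session IDs are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, List


class ToySessionQueue:
    """In-memory model of FIFO delivery per session ID (not the Azure SDK)."""

    def __init__(self) -> None:
        self._sessions: Dict[str, Deque[str]] = {}

    def send(self, session_id: str, body: str) -> None:
        # Messages are appended in arrival order within their session.
        self._sessions.setdefault(session_id, deque()).append(body)

    def receive_all(self, session_id: str) -> List[str]:
        # A session receiver drains one session's messages in arrival order.
        return list(self._sessions.pop(session_id, deque()))


q = ToySessionQueue()
q.send("inventory", "update-1")
q.send("other", "x")
q.send("inventory", "update-2")
q.send("inventory", "update-3")
print(q.receive_all("inventory"))  # ['update-1', 'update-2', 'update-3']
```

Messages in other sessions do not interleave with the `inventory` session, which is why a single session ID per ordered stream preserves queue order.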

Orders

-

• Orders must receive inventory item changes automatically after inventory items are updated or saved.
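Reacting automatically to inserted or updated items is what the Azure Cosmos DB change feed provides; for example, an Azure Functions trigger for Azure Cosmos DB reads it. The model below is a hypothetical in-memory illustration, not the Cosmos DB SDK. Each write gets an increasing sequence number, and a consumer resumes from its last checkpoint, receiving only the latest version of each item changed since then. The class name and sample items are hypothetical.

```python
from typing import Dict, List, Tuple


class ToyContainer:
    """In-memory model of change-feed style reads (not the Cosmos DB SDK)."""

    def __init__(self) -> None:
        self._lsn = 0                                  # increases on every write
        self._items: Dict[str, Tuple[int, dict]] = {}  # id -> (last write lsn, item)

    def upsert(self, item: dict) -> None:
        self._lsn += 1
        self._items[item["id"]] = (self._lsn, dict(item))

    def read_changes(self, since: int) -> Tuple[List[dict], int]:
        """Return items written after `since` (latest version only) and a new checkpoint."""
        changed = sorted(
            ((lsn, item) for lsn, item in self._items.values() if lsn > since),
            key=lambda pair: pair[0],
        )
        return [item for _, item in changed], self._lsn


container = ToyContainer()
container.upsert({"id": "latte", "price": 4.5})
changes, checkpoint = container.read_changes(0)  # sees the new item
container.upsert({"id": "latte", "price": 4.75})
container.upsert({"id": "muffin", "price": 3.0})
changes, checkpoint = container.read_changes(checkpoint)
print([c["id"] for c in changes])  # ['latte', 'muffin']
```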

Issues

-

• Developers are storing the Azure Cosmos DB credentials insecurely, in clear text, within the Inventory Items API code.

• Production Azure Cache for Redis maintenance has negatively affected application performance.
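The clear-text credential issue is usually addressed by removing secrets from source code. Settings are read from configuration at run time, and in Azure a managed identity (for example through `DefaultAzureCredential` in the `azure-identity` package) or a Key Vault reference is preferred over an account key. The standard-library sketch below covers only the first step: loading the endpoint from the environment and failing fast when it is missing. The `COSMOS_ENDPOINT` setting name and the `load_cosmos_endpoint` helper are hypothetical.

```python
import os
from typing import Mapping, Optional


def load_cosmos_endpoint(env: Optional[Mapping[str, str]] = None) -> str:
    """Read the Cosmos DB endpoint from configuration instead of hard-coding it."""
    env = os.environ if env is None else env
    endpoint = env.get("COSMOS_ENDPOINT")  # hypothetical setting name
    if not endpoint:
        raise RuntimeError("COSMOS_ENDPOINT is not configured")
    if not endpoint.startswith("https://"):
        raise ValueError("Cosmos DB endpoint must use HTTPS")
    # No account key is read here: authentication is assumed to come from a
    # managed identity, so no secret appears in the source code.
    return endpoint


print(load_cosmos_endpoint({"COSMOS_ENDPOINT": "https://example-account.documents.azure.com:443/"}))
```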

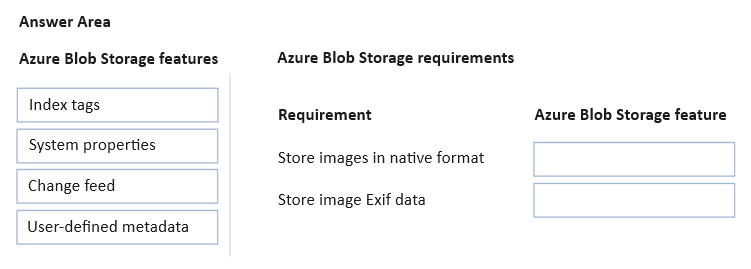

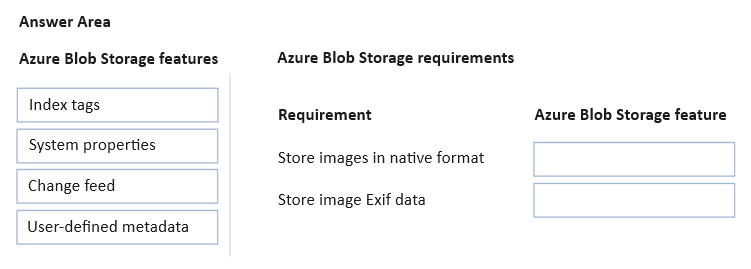

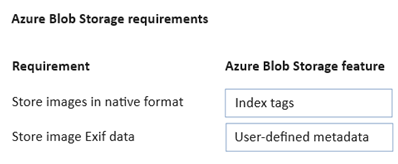

You need to store inventory item images.

Which Azure Blob Storage feature should you use? To answer, move the appropriate Azure Blob Storage features to the correct requirements. You may use each Azure Blob Storage feature once, more than once, or not at all. You may need to move the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

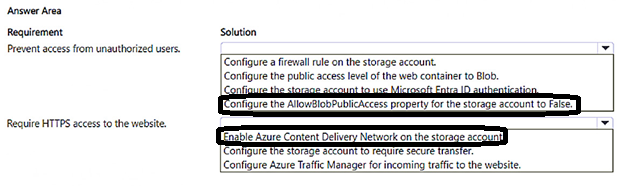

Question #418

HOTSPOT

-

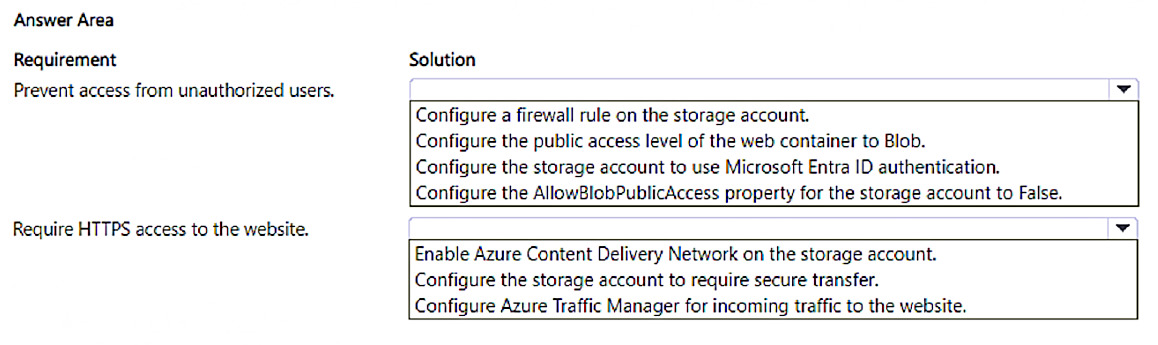

A company has an Azure storage static website with a custom domain name.

The company informs you that unauthorized users from a different country/region are accessing the website. The company provides the following requirements for the static website:

• Unauthorized users must not be able to access the website.

• Users must be able to access the website using the HTTPS protocol.

You need to implement the changes to the static website.

What should you do? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
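Requirements like these are commonly met by placing Azure Front Door or Azure CDN in front of the static website: geo-filtering rules block requests by country/region, and the edge endpoint serves the custom domain over HTTPS. The toy model below is not a Front Door or CDN API. It only illustrates the two behaviors, and the `EdgePolicy` class, the blocked code `ZZ`, and the host `www.contoso.com` are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class EdgePolicy:
    """Toy model of edge rules in front of a static website (not a real Azure API)."""

    blocked_countries: FrozenSet[str]  # uppercase ISO country codes to deny

    def handle(self, scheme: str, country: str, host: str, path: str) -> Tuple[int, str]:
        # Geo-filtering runs first: blocked countries/regions get 403 Forbidden.
        if country.upper() in self.blocked_countries:
            return 403, "Forbidden"
        # Plain HTTP is redirected to HTTPS on the same host and path.
        if scheme.lower() == "http":
            return 301, f"https://{host}{path}"
        return 200, "OK"


policy = EdgePolicy(frozenset({"ZZ"}))
print(policy.handle("https", "ZZ", "www.contoso.com", "/"))            # (403, 'Forbidden')
print(policy.handle("http", "US", "www.contoso.com", "/index.html"))   # (301, 'https://www.contoso.com/index.html')
```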

Question #419

DRAG DROP

-

Case study

-

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

-

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. When you are ready to answer a question, click the Question button to return to the question.

Background

-

Fourth Coffee is a global coffeehouse chain and coffee company recognized as one of the world’s most influential coffee brands. The company is renowned for its specialty coffee beverages, including a wide range of espresso-based drinks, teas, and other beverages. Fourth Coffee operates thousands of stores worldwide.

Current environment

-

The company is developing cloud-native applications hosted in Azure.

Corporate website

-

The company hosts a public website located at http://www.fourthcoffee.com/. The website is used to place orders as well as view and update inventory items.

Inventory items

-

In addition to its core coffee offerings, Fourth Coffee recently expanded its menu to include inventory items such as lunch items, snacks, and merchandise. Corporate team members constantly update inventory. Users can customize items. Corporate team members configure inventory items and associated images on the website.

Orders

-

Associates in the store serve customized beverages and items to customers. Orders are placed on the website for pickup.

The application components process data as follows:

1. Azure Traffic Manager routes a user order request to the corporate website hosted in Azure App Service.

2. Azure Content Delivery Network serves static images and content to the user.

3. The user signs in to the application through a Microsoft Entra ID for customers tenant.

4. Users search for items and place an order on the website as item images are pulled from Azure Blob Storage.

5. Item customizations are placed in an Azure Service Bus queue message.

6. Azure Functions processes item customizations and saves the customized items to Azure Cosmos DB.

7. The website saves order details to Azure SQL Database.

8. SQL Database query results are cached in Azure Cache for Redis to improve performance.

The application consists of the following Azure services:

Requirements

-

The application components must meet the following requirements:

• Azure Cosmos DB development must use a native API that receives the latest updates and stores data in a document format.

• Costs must be minimized for all Azure services.

• Developers must test Azure Blob Storage integrations locally before deployment to Azure. Testing must support the latest versions of the Azure Storage APIs.

Corporate website

-

• User authentication and authorization must allow one-time passcode sign-in methods and social identity providers (Google or Facebook).

• Static web content must be stored closest to end users to reduce network latency.

Inventory items

-

• Customized items read from Azure Cosmos DB must maximize throughput while ensuring data is accurate for the current user on the website.

• Processing of inventory item updates must automatically scale and enable updates across an entire Azure Cosmos DB container.

• Inventory items must be processed in the order they were placed in the queue.

• Inventory item images must be stored as JPEG files in their native format so that exchangeable image file format (EXIF) data is stored with the blob data when the image file is uploaded.

• The Inventory Items API must securely access the Azure Cosmos DB data.

Orders

-

• Orders must receive inventory item changes automatically after inventory items are updated or saved.

Issues

-

• Developers are storing the Azure Cosmos DB credentials insecurely, in clear text, within the Inventory Items API code.

• Production Azure Cache for Redis maintenance has negatively affected application performance.

You need to secure the corporate website for users.